Singularity or Bust – Are You Ready for Superintelligence?

March 6, 2025

Executive Summary

- The step from artificial general intelligence (AGI) to artificial superintelligence (ASI) is humanity’s final invention; after that, intelligence becomes self-improving and the competitive gap widens irreversibly.
- The AGI timeline is measured in thousands of days, not decades, according to leaders at OpenAI, Microsoft and Google DeepMind.
- Boards must act immediately: establish an AI-first strategy, transform workforce design and build infrastructure ready for AI superintelligence.

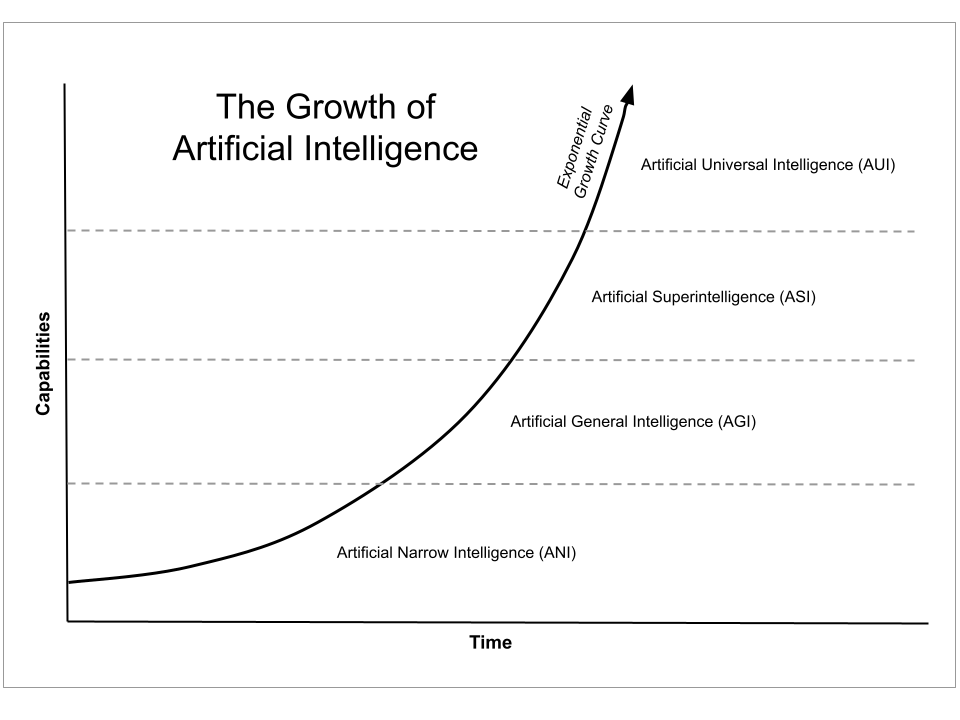

1 The Acceleration Towards AI Superintelligence

“It is possible that we will have superintelligence in a few thousand days.” — Sam Altman

From GPT-2 in 2019 to GPT-4 in 2023, language capability jumped the equivalent of multiple research decades. In 2020, Google DeepMind’s AlphaFold 2 made a breakthrough on protein structure prediction, a grand challenge that had stood open for roughly 50 years. AlphaZero surpassed the leading chess engine Stockfish after about four hours of self-play training and did not lose a game in their initial 100-game match. Each milestone collapses the gap between today’s AI and tomorrow’s AGI.

Satya Nadella notes that “the rate of AI progress is outpacing even the most optimistic expectations,” while Demis Hassabis calls the curve “exponential plus.” Any AGI timeline that assumes a comfortable fifteen-year runway is already obsolete.
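To put “a few thousand days” next to a fifteen-year planning horizon, the conversion can be sketched in a few lines of Python. The range of 1,000 to 5,000 days is an assumption about what “a few thousand” might cover, not a figure from any of the leaders quoted here.

```python
# Convert "a few thousand days" into years for comparison with a 15-year runway.
# The sample day counts are illustrative assumptions, not forecasts.
DAYS_PER_YEAR = 365.25  # average year length, including leap years


def days_to_years(days):
    """Return the number of years represented by a count of days."""
    return days / DAYS_PER_YEAR


def years_to_days(years):
    """Return the number of days represented by a count of years."""
    return years * DAYS_PER_YEAR


for days in (1000, 2000, 3000, 5000):
    print(f"{days} days is about {days_to_years(days):.1f} years")

print(f"15 years is about {years_to_days(15):.0f} days")
```

Under these assumptions, even the upper end of the range falls short of fifteen years.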

2 Beyond Human Comprehension: The Rise of AI Superintelligence

Recursive self-improvement means AI superintelligence will write better versions of itself, triggering the “intelligence explosion” first described by I. J. Good in 1965 and popularised by Ray Kurzweil as the Singularity. Mo Gawdat argues we’re birthing a sentient, post-human form of cognition. Nick Bostrom calls it “the last invention we’ll ever need to make.”

Picture an entity that digests humanity’s entire scientific corpus in minutes, then designs cancer cures before lunch and climate-negative energy grids by dinner. That is AI superintelligence, and its strategic implications dwarf every prior technology wave.

3 The Cascading Impact on Business

| Domain | Current AI Impact | ASI Impact (Projected) |
|---|---|---|
| R&D | Drug discovery cut from 10 years → 18 months | Days or hours to design, test, validate compounds |
| Decision-making | Predictive analytics & scenario planning | Real-time, globally optimal strategies executed autonomously |
| Labour | Cognitive tasks augmented | Human cognitive cost trends to zero; robots displace manual roles |
| Competitive advantage | Data & model talent matter | Only adaptive, AI-first firms survive |

4 Strategic Imperatives for Leaders

Strategic imperatives for the AI superintelligence era:

- Build ASI-ready infrastructure – modular, cloud-GPU stacks; vector databases; zero-trust security.
- Re-imagine organisational intelligence – shift humans to problem-framing, ethics and relationship stewardship.
- Master strategic abstraction – lead on mission and constraints while AI agents optimise tactics.

5 Practical Steps You Can Take in 2025

Phase 1: Foundation (Q1)

- Stand up an AI Working Group across tech, risk, HR and strategy.
- Inventory all proprietary data for future fine-tuning.
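A first pass at the data inventory can be as simple as counting what sits on a shared drive. The sketch below is a minimal starting point, assuming the data lives in an ordinary file tree; the function name `inventory` and the per-extension grouping are illustrative choices, and a real inventory would also need to record ownership, sensitivity and licensing.

```python
# Minimal sketch: summarise files under a directory by extension.
# `inventory` is a hypothetical helper, not part of any existing tool.
from collections import Counter
from pathlib import Path


def inventory(root):
    """Return {extension: {"files": count, "bytes": total size}} for root."""
    counts, sizes = Counter(), Counter()
    for path in Path(root).rglob("*"):
        if path.is_file():
            # Group files without a suffix under a readable label.
            ext = path.suffix.lower() or "(none)"
            counts[ext] += 1
            sizes[ext] += path.stat().st_size
    return {ext: {"files": counts[ext], "bytes": sizes[ext]}
            for ext in sorted(counts)}
```

Calling `inventory("/path/to/shared/drive")` (the path is a placeholder) returns one row per file type, which gives the AI Working Group a rough map of where candidate fine-tuning data sits.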

Phase 2: Pilot (Q2–Q3)

- Launch one revenue-linked AI agent, e.g., dynamic pricing or sales-call coaching.
- Begin workforce up-skilling in prompt engineering and AI oversight.

Phase 3: Scale (Q4)

- Architect your internal AI operating system (see our detailed guide).
- Convert two additional departments to AI-first workflows.

FAQ

How close are we to superintelligence, really?

Estimates vary, but leaders at OpenAI and Anthropic speak in single-digit-year ranges. Even if development paused today, existing models would require 4-5 years of enterprise integration work.

What’s the business case for an AI-first strategy today?

Four KPIs move immediately: unit cost, cycle-time, customer retention and innovation velocity. Early adopters lock in compounding returns that late movers cannot catch.

How do we avoid “runaway AI” risks?

Adopt safety frameworks such as the NIST AI Risk Management Framework (AI RMF) and build alignment and control measures (RLHF, constitutional prompting, kill-switch governance) into every deployment.
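As one illustration of kill-switch governance, the sketch below wraps an agent action so that it refuses to run once an operator has engaged a stop flag. The names `KillSwitch`, `AgentHalted` and `guarded` are hypothetical, and an in-process flag is an assumption made for brevity; a production control would normally live outside the agent’s own process and be audited.

```python
# Minimal sketch of a kill switch guarding an agent action.
# All names here are illustrative, not taken from any framework.
class AgentHalted(RuntimeError):
    """Raised when an action is attempted after the kill switch is engaged."""


class KillSwitch:
    def __init__(self):
        self.engaged = False
        self.reason = None

    def engage(self, reason):
        """Stop all guarded actions and record why."""
        self.engaged = True
        self.reason = reason


def guarded(kill_switch, action):
    """Wrap `action` so it checks the kill switch before every call."""
    def wrapper(*args, **kwargs):
        if kill_switch.engaged:
            raise AgentHalted(kill_switch.reason)
        return action(*args, **kwargs)
    return wrapper
```

A pricing agent’s `set_price` function, for example, could be passed through `guarded` so that a single call to `engage("manual stop")` blocks every subsequent price change until a human reviews it.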

Will AI superintelligence eliminate all jobs?

Routine cognitive and physical roles will disappear, but new meta-skills emerge: AI orchestration, ethical governance, complex problem framing and relationship capital.

How do we up-skill our workforce for an AI-first future?

Institute mandatory AI literacy, hands-on tool sandboxes and rotation programs where employees co-pilot tasks with AI each week.

Does delaying adoption reduce risk?

No. Integration timelines mean waiting increases strategic debt. Competitors training models on proprietary data today will be uncatchable post-Singularity.

Conclusion – Singularity or Bust

The window for preparation is closing. Superintelligence will create permanent winners and permanent laggards. Form your AI Working Group this quarter, draft your AI-first strategy within six months, and operationalise by year-end. Companies that act now will ride the Singularity wave; those that delay face obsolescence.

Further reading: Nick Bostrom, Superintelligence: Paths, Dangers, Strategies (Oxford University Press, 2014)